Understanding Reinforcement Learning: Key Concepts and Applications

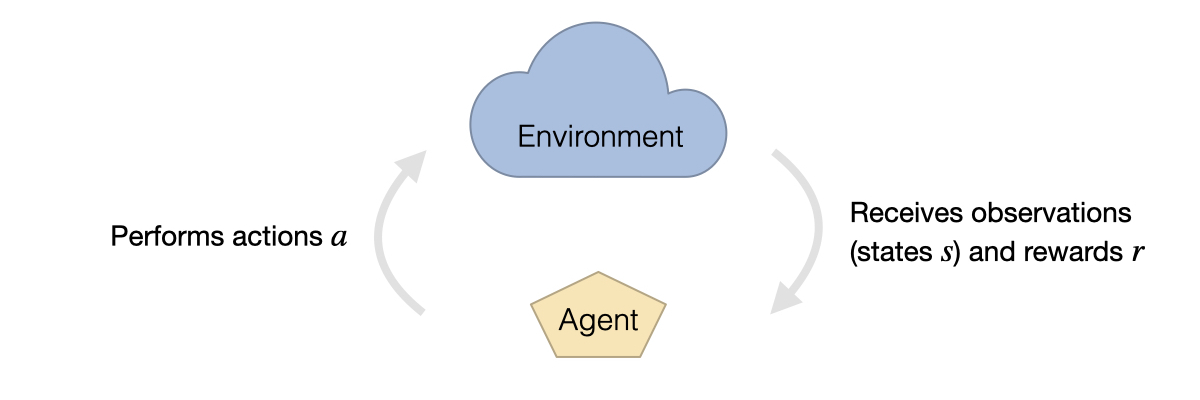

Reinforcement Learning (RL) is a field within artificial intelligence that studies how agents learn to make decisions by interacting with their environment. It is well suited to robotics, game AI, and dynamic decision-making systems, and it relies on techniques such as Q-learning, policy gradients, and careful reward design to train models that adapt to complex scenarios.

Q-Learning: A Value-Based Approach

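At the heart of many RL algorithms is Q-learning, a value-based approach in which an agent learns to estimate the value of taking a particular action in a specific state. Learning proceeds by trial and error: the agent acts, and the environment responds with a reward and a new state. The Q-value, or action-value function, Q(s, a), represents the expected cumulative (typically discounted) reward from taking action a in state s and acting optimally afterwards. The agent iteratively updates its Q-values with a rule derived from the Bellman optimality equation, moving each estimate toward the observed reward plus the discounted value of the best action in the next state. In the tabular setting, with sufficient exploration and a suitably decaying learning rate, these estimates converge to the optimal values, and acting greedily on them yields a policy that maximizes cumulative reward.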
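The update described above is commonly written as Q(s, a) ← Q(s, a) + α [r + γ max over a' of Q(s', a') − Q(s, a)], where α is the learning rate and γ the discount factor. The following is a minimal sketch of tabular Q-learning under stated assumptions: the five-state corridor, its reward of 1 at the rightmost state, the function name `q_learning`, and all hyperparameter values are hypothetical choices made for illustration, not part of any particular library.

```python
import random


def q_learning(n_states=5, episodes=500, alpha=0.1, gamma=0.9, epsilon=0.1, seed=0):
    """Tabular Q-learning on a hypothetical 1-D corridor.

    States are 0..n_states-1; actions are 0 (left) and 1 (right).
    Reaching the rightmost state ends the episode with reward 1.
    """
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(n_states)]
    goal = n_states - 1
    for _ in range(episodes):
        state = 0
        while state != goal:
            # Epsilon-greedy action selection; ties are broken at random.
            if rng.random() < epsilon or q[state][0] == q[state][1]:
                action = rng.randrange(2)
            else:
                action = 0 if q[state][0] > q[state][1] else 1
            # Moving left from state 0 leaves the agent in state 0.
            next_state = max(0, state - 1) if action == 0 else state + 1
            reward = 1.0 if next_state == goal else 0.0
            # The goal is terminal, so nothing is bootstrapped from it.
            if next_state == goal:
                target = reward
            else:
                target = reward + gamma * max(q[next_state])
            # Move the estimate a fraction alpha toward the target.
            q[state][action] += alpha * (target - q[state][action])
            state = next_state
    return q


q_table = q_learning()
# Greedy action in each non-terminal state (1 means "move right").
greedy_policy = [max((0, 1), key=lambda a: q_table[s][a]) for s in range(4)]
```

In this toy setting the greedy policy should move right in every non-terminal state, since that is the shortest path to the only reward. Larger problems usually replace the table with a function approximator, which this sketch does not cover.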

Policy Gradients: Direct Strategy Optimization

Another popular paradigm in reinforcement learning is the use of policy gradients. Unlike Q-learning, which learns the value of actions and derives a policy from those values, policy gradient methods directly optimize the policy, that is, the parameterized strategy the agent uses to choose its actions. They estimate the gradient of the expected return with respect to the policy parameters and take small steps in that direction, so actions that led to higher returns become more likely. This approach tends to work well in environments with high-dimensional or continuous action spaces and has been instrumental in complex tasks such as playing video games and controlling robots.
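One of the simplest policy gradient methods is REINFORCE, which increases the log-probability of each sampled action in proportion to the reward that followed. The sketch below applies it to a hypothetical three-armed bandit with a softmax policy; the arm payout probabilities, step size, running-average baseline, and function names are all assumptions chosen for illustration.

```python
import math
import random


def softmax(prefs):
    """Convert action preferences into a probability distribution."""
    m = max(prefs)  # subtract the max for numerical stability
    exps = [math.exp(p - m) for p in prefs]
    total = sum(exps)
    return [e / total for e in exps]


def reinforce_bandit(arm_means=(0.2, 0.5, 0.8), steps=5000, lr=0.1, seed=0):
    """REINFORCE with a softmax policy on a hypothetical 3-armed bandit.

    Each arm pays reward 1 with the given probability, otherwise 0.
    """
    rng = random.Random(seed)
    theta = [0.0] * len(arm_means)  # policy parameters (action preferences)
    baseline = 0.0  # running average reward, used to reduce variance
    for t in range(1, steps + 1):
        probs = softmax(theta)
        action = rng.choices(range(len(theta)), weights=probs)[0]
        reward = 1.0 if rng.random() < arm_means[action] else 0.0
        advantage = reward - baseline
        # d/d theta[k] of log pi(action) is 1[k == action] - probs[k].
        for k in range(len(theta)):
            grad_log = (1.0 if k == action else 0.0) - probs[k]
            theta[k] += lr * advantage * grad_log
        # Incremental update of the running average reward.
        baseline += (reward - baseline) / t
    return softmax(theta)


final_probs = reinforce_bandit()
```

The policy is never told which arm is best; it shifts probability toward the highest-paying arm only because actions followed by above-average reward are reinforced. Subtracting a baseline does not bias the gradient estimate but typically lowers its variance.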

Reward Design: Crafting Effective Feedback

Essential to both Q-learning and policy gradient methods is reward design: crafting the feedback signals that drive learning. A well-designed reward can significantly improve how quickly and how reliably the agent learns. For instance, in a robotic manipulation task, a sparse reward that is given only when the object is finally grasped provides almost no guidance during early training, so the agent explores inefficiently. Reward shaping addresses this by adding intermediate signals, such as credit for moving the gripper closer to the object, that steer the agent toward desired behaviors. However, it is crucial to strike a balance; overly complex or misleading rewards can lead to unintended behaviors or reward hacking, where the agent maximizes the reward signal without accomplishing the intended task.
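One well-known way to add guidance without changing which policies are optimal is potential-based reward shaping (Ng et al., 1999), which adds the term F(s, s') = γ φ(s') − φ(s) to the environment reward for some potential function φ. The sketch below is an illustration under stated assumptions: the one-dimensional reaching task, the goal position, and the names `shaped_reward` and `neg_distance` are hypothetical and not taken from the article.

```python
def shaped_reward(reward, state, next_state, potential, gamma=0.99, done=False):
    """Add a potential-based shaping term to an environment reward.

    The shaping term is gamma * phi(next_state) - phi(state); the
    potential of a terminal state is treated as zero.
    """
    next_potential = 0.0 if done else potential(next_state)
    return reward + gamma * next_potential - potential(state)


# Hypothetical 1-D reaching task: the state is the gripper position and
# the goal sits at position 10. The potential is the negative distance
# to the goal, so states closer to the goal have higher potential.
GOAL = 10.0


def neg_distance(position):
    return -abs(GOAL - position)


# With a sparse environment reward of 0, a step toward the goal earns a
# positive shaped reward and a step away earns a negative one.
toward = shaped_reward(0.0, 4.0, 5.0, neg_distance, gamma=1.0)
away = shaped_reward(0.0, 4.0, 3.0, neg_distance, gamma=1.0)
```

Because the shaping terms telescope along any trajectory, the agent cannot accumulate shaped reward by looping between states, which is one common form of reward hacking with hand-written bonuses.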

Environments and Benchmarking

The environments in which RL agents operate are also critical to their success. Platforms like OpenAI Gym (now maintained as Gymnasium) provide standardized benchmarks for evaluating RL algorithms across various tasks, from simple games to complex robotics challenges. Because every environment exposes the same interface, researchers and developers can test and compare different reinforcement learning techniques against a common set of challenges. That interface is small, centered on a reset call that starts an episode and a step call that applies an action, which makes it straightforward to create custom environments for experimentation.
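To show what a custom environment can look like, here is a minimal sketch that mimics the Gym-style interface. It assumes the convention used by Gym 0.26+ and Gymnasium, where `reset` returns an observation and an info dict and `step` returns a five-element tuple. The class is a plain Python object rather than a subclass of `gym.Env`, so it runs without Gym installed; `CorridorEnv` and `run_episode` are hypothetical names.

```python
class CorridorEnv:
    """Hypothetical corridor environment with a Gym-style interface.

    The agent starts at position 0 and receives reward 1 on reaching
    the rightmost cell. Episodes are cut off after max_steps.
    """

    def __init__(self, length=5, max_steps=20):
        self.length = length
        self.max_steps = max_steps
        self.position = 0
        self.steps = 0

    def reset(self, seed=None):
        # seed is accepted for interface compatibility only; this
        # environment is deterministic and does not use it.
        self.position = 0
        self.steps = 0
        return self.position, {}

    def step(self, action):
        # Action 1 moves right; any other action moves left.
        move = 1 if action == 1 else -1
        self.position = min(self.length - 1, max(0, self.position + move))
        self.steps += 1
        terminated = self.position == self.length - 1
        truncated = not terminated and self.steps >= self.max_steps
        reward = 1.0 if terminated else 0.0
        return self.position, reward, terminated, truncated, {}


def run_episode(env, policy):
    """Run one episode and return (total reward, number of steps)."""
    obs, info = env.reset()
    total = 0.0
    done = False
    while not done:
        obs, reward, terminated, truncated, info = env.step(policy(obs))
        total += reward
        done = terminated or truncated
    return total, env.steps


right_return, right_steps = run_episode(CorridorEnv(), lambda obs: 1)
left_return, left_steps = run_episode(CorridorEnv(), lambda obs: 0)
```

A real Gym or Gymnasium environment would additionally declare its observation and action spaces and inherit from the library's base class; those details are omitted here to keep the sketch dependency-free.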

Real-World Applications

Applications of reinforcement learning are growing across industries. In robotics, RL algorithms are used to teach robots to perform tasks such as grasping objects or navigating environments autonomously. In the gaming industry, RL helps create AI opponents that learn and adapt to human strategies, leading to more engaging and challenging gameplay. In sectors like finance and healthcare, RL-based decision-making systems are being explored for sequential problems such as allocating resources over time, where each decision affects the options available later.

Conclusion

Reinforcement learning is a powerful and versatile approach built on concepts like Q-learning, policy gradients, and thoughtful reward design. With the continued development of richer environments, such as those provided by OpenAI Gym, the potential applications for RL in robotics, game AI, and beyond are broad and promising. As the field evolves, it paves the way for intelligent systems that learn and adapt in real time, changing how we interact with technology.